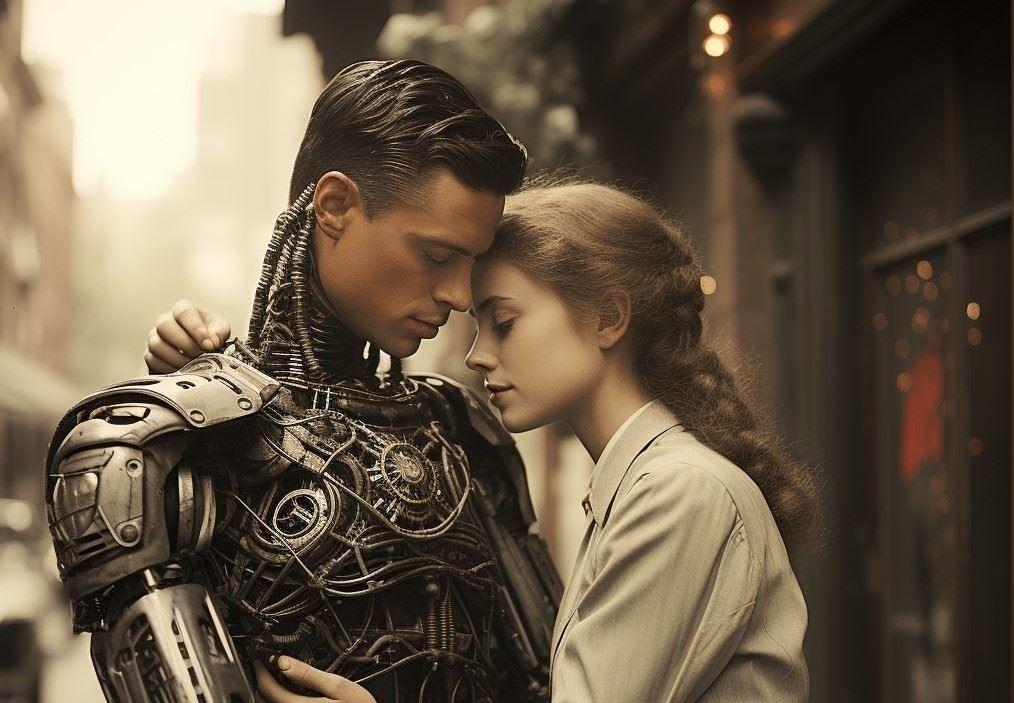

Companionship in the era of AI: A new frontier for relationships and connection

From automating repetitive tasks to transforming entire industries, AI’s impact is broad and deep. However, one of the most profound shifts is happening in a realm we might least expect: human companionship.

As AI systems advance, they are increasingly stepping into roles traditionally reserved for friends, mentors, and even loved ones. This evolution raises important questions about the nature of relationships, emotional bonds, and what it means to be human in the era of AI.

Large language models as personal coaches

Large language models, such as OpenAI’s GPT or Google’s Gemini (formerly Bard), are trained on vast amounts of text and generate responses that can simulate human conversation. Beyond their utility for quick answers and task management, these AI systems are being used as personal coaches.

Imagine having access to a virtual mentor that can offer advice on career moves, provide emotional support during tough times, or help you build new habits for personal growth. For many, these AI-driven interactions are a form of companionship—offering consistent, non-judgmental advice anytime, anywhere.

AI-powered personal coaches are appealing for their availability, perceived neutrality, and customization. They can tailor responses to each user’s unique context, drawing on details that a human coach might need weeks or months to learn.

As these systems become more sophisticated, they can engage users in deeper conversations about values, aspirations, and life’s challenges.

But while they are convenient and can be remarkably insightful, there is a risk of dependency. Can an AI truly understand human experience, or does it merely mimic empathy through patterns and data?

The difference between simulated and genuine understanding is subtle but crucial—and it’s where the potential for misunderstanding or emotional detachment lies.

AI relationships: Replika and beyond

The concept of having a relationship with an AI isn’t science fiction anymore—it’s reality for millions. Platforms like Replika offer users the opportunity to create AI companions that learn, grow, and evolve based on their interactions.

For some, these digital companions offer solace in loneliness, a place to express thoughts without judgment, or even a substitute for human relationships in times of isolation.

While these AI companions can be comforting, they also raise ethical and psychological questions. What does it mean when people begin to prefer relationships with AI over human connection?

AI offers predictable and controlled relationships without the messiness of human emotions, but it also lacks the unpredictability, spontaneity, and depth that define true companionship. As people increasingly turn to AI for emotional support, are we at risk of diminishing the value of human relationships, which often thrive on complexity and growth through adversity?

Griefbots: AI as a bridge to lost loved ones

In one of the most poignant uses of AI, griefbots have emerged as a way to digitally resurrect loved ones who have passed away. By feeding AI systems with data from text messages, social media posts, and recorded conversations, these programs can mimic the voice and personality of the deceased, allowing people to “speak” with them long after they’re gone.

The intention behind griefbots is compassionate—they offer a way to navigate the pain of loss by creating an illusion of continued connection.

Yet this practice wades into ethically murky waters. On one hand, griefbots can provide comfort and closure. On the other, they blur the line between reality and simulation, potentially delaying the natural process of grieving.

The presence of a griefbot can create a liminal space where people neither fully accept the loss nor move on from it. This raises the question of whether these digital echoes truly honor the memory of the deceased or risk distorting the past by reducing a complex human being to a set of data-driven responses.

The broader implications: AI as companions

The rise of AI companions fundamentally challenges our notions of relationship, empathy, and human connection. As AI becomes more capable of filling roles traditionally reserved for humans—whether as coaches, friends, or stand-ins for loved ones—we must grapple with what these relationships mean for society. Do they enhance our lives by offering support where human interaction is scarce, or do they erode the richness of authentic human connection?

One concern is that AI companions might amplify loneliness by providing the illusion of connection without its depth. If people begin to rely on AI for emotional needs, they might invest less in nurturing real-world relationships, which require patience, effort, and mutual growth. The danger lies in settling for the comfort of programmed responses instead of facing the challenges of human relationships, which foster resilience and understanding.

However, there’s another perspective that sees AI companionship as an evolution rather than a replacement. For individuals who struggle with social anxiety, isolation, or limited social networks, AI companions can be a gateway to better understanding themselves and eventually building human connections. They could serve as a bridge, offering practice and confidence in interactions that may later translate into real-world relationships.

Conclusion: Navigating a new era of relationships

The integration of AI into our emotional and social lives is just beginning, and it presents both opportunities and risks. As we explore the potential of AI companions, we must do so with a clear-eyed understanding of both their strengths and limitations. AI has the power to transform how we experience companionship, but we must be careful not to lose sight of the irreplaceable value of human connection in the process.

In this era of AI companionship, the challenge will be to balance the benefits of AI-driven support with a continued commitment to nurturing the relationships that make us truly human.

Dominic Ligot is the founder, CEO and CTO of CirroLytix, a social impact AI company. He also serves as the head of AI and Research at the IT and BPM Association of the Philippines (IBPAP), and the Philippines' representative to the Expert Advisory Panel on the International Scientific Report on Advanced AI Safety.